In the wild, shifting world of mental health therapy, artificial intelligence (AI) is starting to carve out its own space, wriggling into our brains (metaphorically speaking). One big hurdle many of us run into is self-sabotage. You know, that pesky habit that can slam the brakes on personal growth like nothing else. This piece (yes, written by me) is gonna meander through how AI is attempting to tackle self-sabotage. Spoiler alert: there’s promise!

Table of Contents

- Self-Sabotage: What’s That, Really?

- What Sparks Self-Sabotage?

- Enter: AI Therapy

- AI’s Role in Kicking Self-Sabotage Out the Door

- The Messy Bits

- Peering into AI’s Future

- Wrapping Up (Or, Almost)

Self-Sabotage: What’s That, Really?

OK, so let’s talk about self-sabotage for a moment. Basically, it covers the behaviors and thought patterns that hold us back from hitting our big (sometimes pie-in-the-sky) goals. It can sneak up on you as procrastination (guilty!), self-doubt, and that evil twin, perfectionism. I once read in the Journal of Personality and Social Psychology (mind you, waaaay back in 1978) that self-sabotage often stems from a mix of fear of failure and low self-esteem. The researchers (Jones & Berglas, if I remember right) noticed that folks who go down the self-sabotage road set completely unrealistic goals for themselves. Like trying to write a novel in a weekend… yeah, right!

What Sparks Self-Sabotage?

- Crippling Fear of Failure: Some folks would rather wear the “I didn’t even try” badge than risk an actual defeat.

- Reflecting Pool of Low Self-Esteem: And then you have the doubters, whose nagging inner voices insist they’re never good enough.

- Perfectionism Overdrive: Ever had a project you just couldn’t start because it had to be flawless? Totally paralyzing.

- Baggage from Childhood: Sometimes our early years saddle us with beliefs like “I’m not worth it” or “I CAN’T possibly succeed!”

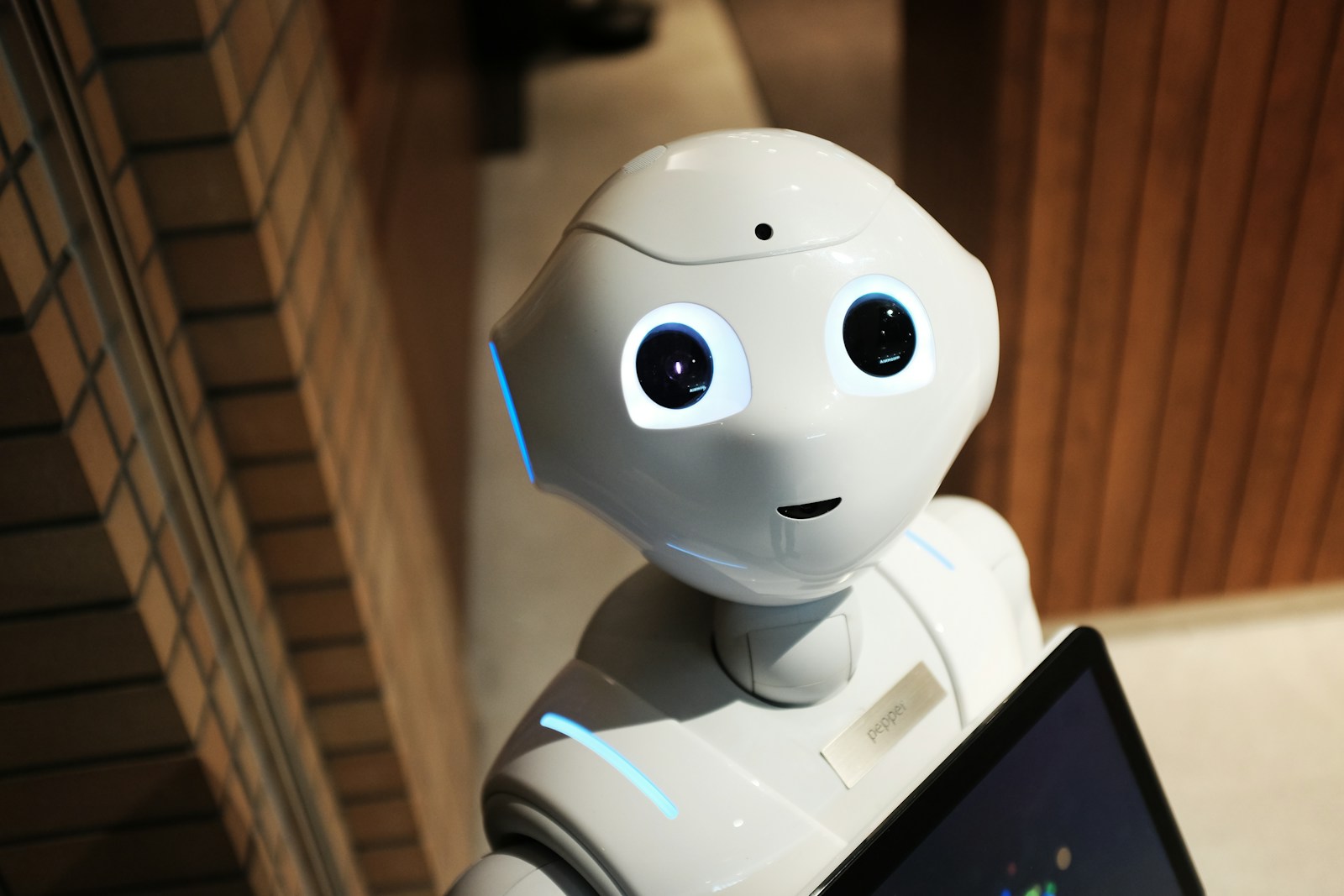

Enter: AI Therapy

Through the use of machine learning and natural language processing, AI therapy is forging ahead. This tech is set up to mimic human conversation, but without the eye-rolls (though it might pause a bit awkwardly now and then). Over at McKinsey & Company, there was talk (back in 2018, I remember reading) that AI could really shake things up by improving access to, well, mental health care, especially where traditional methods fall short.

What’s in It for Us with AI Therapy?

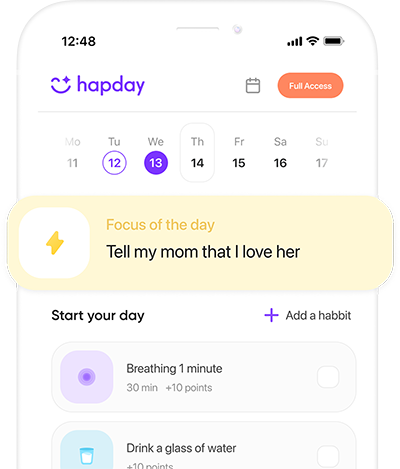

- Always There: AI therapy is like the buddy who never sleeps. Midnight worries? The AI’s got your back.

- No Wallet Drain: Let’s face it, therapy’s not cheap. Most AI solutions are easier on the bank account.

- Anonymous Hug: Chat in your PJs, and no one even needs to know it’s you.

- Reliably Consistent: Unlike some therapists having a bad day, AI stays on its game.

AI’s Role in Kicking Self-Sabotage Out the Door

Here’s the bit we’ve all been waiting for: how AI therapy serves up helpings of anti-sabotage goodness.

1. Tailored to You

AI platforms can analyze what you tell them and say, “Hey, here’s where you keep getting tripped up!” From there, they build a detailed assessment of your patterns.

2. CBT in the Digital Space

Cognitive Behavioral Therapy (CBT) is all the rage, and rightfully so. AI is there too, helping restructure those pesky negative thoughts. A study in the Journal of Anxiety Disorders noted the effectiveness of such digital CBT approaches. Could be magic!

3. Quickfire Feedback

Sometimes, we need someone (or, erm, something) to catch us red-handed in our self-sabotaging ways. That’s AI’s forte, apparently.

4. Compassion Cultivation 101

AI can nudge you toward self-compassion. After all, who doesn’t need a reminder to be kind to themselves?

My Own Woebot Encounter

I had a brief dalliance with Woebot: a small step for man, one giant leap for my mental health! Researchers publishing in the Journal of Medical Internet Research found this AI buddy effective. Using it, I felt a bit less alone tackling my own challenges.

The Messy Bits

Don’t get me wrong, AI therapy isn’t without hiccups. Privacy issues still give folks pause. And while these tools are helpful, they’re not full-on human therapists. For complex issues, you may still need to call a real-life pro.

Ethical Quagmires?

Let’s be clear: ethical landmines do pop up, and developers need to go the extra mile to ensure fairness and guard against bias.

Peering into AI’s Future

It’s gonna be a ride watching AI therapy grow, perhaps blending with virtual reality someday to make the experience even more… immersive? Trolls on Reddit seem to think so, anyway, and I can’t say they’re wrong!

Digital Coaches?

The idea of AI as a life coach is no longer sci-fi but reality. Or is it?

Wrapping Up (Or, Almost)

So where are we at? AI therapy offers a fresh approach: cost-effective and available at the press of a button! With tech advancing faster than my coffee gets cold, it’s only gonna become more of a cornerstone of mental health care. Who’s to say AI won’t change the game of self-sabotage prevention? I’m ready to give it a whirl and see what unfolds.
